Unleashing the potential of the NVIDIA GPU toolkit: Accelerating tasks with CUDA

Intro to CUDA Toolkit

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on graphics processing units (GPUs). With CUDA, developers can dramatically speed up computing applications by harnessing the parallel processing power of NVIDIA GPUs.

The CUDA Toolkit is a software development environment for creating high-performance GPU-accelerated applications. It includes the CUDA compiler, libraries, and other tools needed to develop, optimize, and deploy CUDA applications.

This toolkit is available for free download from the NVIDIA website. It supports a wide range of platforms, including desktop workstations, enterprise data centers, and cloud-based platforms.

The CUDA Toolkit includes the following components:

CUDA compiler: The CUDA compiler compiles CUDA code into machine code that can be executed on GPUs.

CUDA libraries: The CUDA libraries provide a set of functions that can be used to accelerate common computing tasks, such as linear algebra, matrix operations, and signal processing.

CUDA runtime: The CUDA runtime is a library that provides support for executing CUDA applications on GPUs.

CUDA debugging and profiling tools: The CUDA Toolkit includes a number of tools for debugging and profiling CUDA applications.

CUDA documentation: The CUDA Toolkit includes comprehensive documentation that explains how to use the CUDA compiler, libraries, and other tools.

Continuing our journey, this second blog focuses on understanding CUDA basics through a simple operation: vector multiplication, used here to show the basic workings of CUDA programming. If you are not familiar with vector multiplication in Python, please first refer to vecMulUsingNumPy and then come back to this blog.

A high-level overview of what we're gonna learn...

In the realm of computational efficiency, the stark contrast between the processing capabilities of Central Processing Units (CPUs) and Graphics Processing Units (GPUs) becomes pronounced, emphasizing the transformative impact of parallelization. Let us embark upon the exploration of this paradigm shift by initially formulating a rudimentary function for vector multiplication, conceived to be executed on the traditional CPU architecture.

In the CPU-centric domain, the essence lies in sequential execution, where a small number of cores orchestrate the computational symphony. While CPUs boast higher clock speeds and intricate core management features, their inherent limitation in parallel processing potency becomes evident. This sets the stage for the subsequent revelation of the graphical juggernaut: the GPU.

Transitioning to the Graphics Processing Unit, with its multitude of cores arranged in parallel architecture, unveils a computational prowess that eclipses the CPU's sequential finesse. The premise of vector multiplication serves as a tangible illustration, accentuating the unparalleled performance that GPUs can deliver. Despite the ostensibly lower clock speeds and the absence of certain core management functionalities, the sheer abundance of cores propels GPU performance into an echelon of its own.

Consider, for instance, the empirical evidence derived from executing 64 million multiplications. The GPU's alacrity manifests as a mere 0.64 seconds, in stark contrast to the CPU's protracted 31.4 seconds. This monumental discrepancy translates into a roughly 49-fold (call it 50-fold) acceleration in processing speed, a testament to the latent power residing within the parallelized architecture of GPUs.

The ramifications of this accelerated computational landscape are profound, especially when extrapolated to complex programs. A task that might have languished for a month within the methodical confines of a CPU could, if the same speedup held, be expeditiously accomplished within roughly 15 hours on a GPU. The promise of even swifter execution looms on the horizon, contingent upon the augmentation of core counts.
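The figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only the two timings from the text and assumes that a "month" means 30 days of continuous CPU time; the variable names are illustrative, and carrying the same speedup over to a larger program is an assumption, not a guarantee.

```python
# Timings quoted in the text (seconds) for 64 million multiplications.
cpu_seconds = 31.4
gpu_seconds = 0.64

# Speedup is the ratio of the slower time to the faster time.
speedup = cpu_seconds / gpu_seconds

# Assumption: a "month" means 30 days of continuous CPU time.
month_hours = 30 * 24
gpu_hours = month_hours / speedup

print(f"speedup: {speedup:.1f}x")
print(f"a 30-day CPU job at that speedup: {gpu_hours:.1f} hours")
```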

In essence, the narrative woven here articulates not merely a numerical disparity but an epochal shift in the temporal dynamics of computational endeavors. The realization of intricate algorithms and computationally intensive programs, once deemed temporally prohibitive, now stands on the precipice of accelerated fruition. As we traverse the computational frontier, the GPU emerges as a catalyst, propelling us towards realms of efficiency hitherto deemed implausible.

Let us now start by writing the code to do the multiplication on the CPU.

import numpy as np

from timeit import default_timer as timer

The first two lines of code import the necessary libraries:

numpy: This library is used for working with numerical arrays.

timeit: This library is used for measuring the time it takes to execute a code block.

def multiplyVec(a, b, c):
    for i in range(a.size):
        c[i] = a[i] * b[i]

This code defines a function called multiplyVec that takes three arrays a, b, and c as arguments. The function performs element-wise multiplication between the arrays a and b and stores the result in the array c.
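For comparison, NumPy can perform the same element-wise product without a Python-level loop. The sketch below is not part of the original benchmark: it uses a small array (the size 1000 and the names c_loop and c_numpy are illustrative) to show that np.multiply with its out argument fills the output array with the same values as the loop. Much of the loop's cost on the CPU comes from the Python interpreter handling one element at a time.

```python
import numpy as np

def multiplyVec(a, b, c):
    # Explicit loop: one Python-level multiplication per element.
    for i in range(a.size):
        c[i] = a[i] * b[i]

n = 1000  # small illustrative size, not the 64 million used in the post
a = np.arange(n, dtype=np.float32)
b = np.full(n, 2.0, dtype=np.float32)

c_loop = np.empty(n, dtype=np.float32)
multiplyVec(a, b, c_loop)

# Same computation in one call; `out` writes into a preallocated array.
c_numpy = np.empty(n, dtype=np.float32)
np.multiply(a, b, out=c_numpy)

print(np.array_equal(c_loop, c_numpy))
```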

def main():
    n = 64000000  # size per declared array

This code defines a function called main that sets the size of the arrays to 64 million elements.

    a = np.ones(n, dtype=np.float32)
    b = np.ones(n, dtype=np.float32)
    c = np.ones(n, dtype=np.float32)

This code creates three arrays a, b, and c of size n and fills them with ones.

    start = timer()
    multiplyVec(a, b, c)
    vecMulTime = timer() - start

This code measures the time it takes to execute the multiplyVec function. The timer() function is used to get the current time before and after calling the function. The difference between the two times is stored in the variable vecMulTime.
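A single measurement can be noisy. As a minimal sketch (the helper name time_call and the repeat count are illustrative, not from the original code), the same timer() pattern can be wrapped in a function that repeats the call and keeps the fastest run:

```python
from timeit import default_timer as timer

def time_call(func, *args, repeats=3):
    """Return the best wall-clock time in seconds over `repeats` calls."""
    best = float("inf")
    for _ in range(repeats):
        start = timer()
        func(*args)
        elapsed = timer() - start
        best = min(best, elapsed)
    return best

# Example with a cheap stand-in workload.
elapsed = time_call(sum, range(100_000))
print("best of 3 runs: %f seconds" % elapsed)
```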

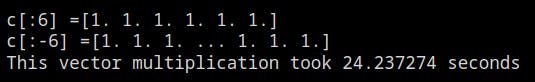

    print("c[:6] = " + str(c[:6]))
    print("c[-6:] = " + str(c[-6:]))

This code prints the first six and last six elements of the array c to verify that the element-wise multiplication was performed correctly.
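Slice notation is easy to get backwards, so here is a small sketch on a hypothetical ten-element array (the name x is illustrative) showing what each form selects. x[-6:] gives the last six elements, whereas x[:-6] gives everything except the last six.

```python
import numpy as np

x = np.arange(10)  # the integers 0 through 9

first_six = x[:6]          # elements at positions 0..5
last_six = x[-6:]          # elements at positions 4..9
all_but_last_six = x[:-6]  # elements at positions 0..3

print(first_six)
print(last_six)
print(all_but_last_six)
```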

    print("This vector multiplication took %f seconds" % vecMulTime)

This code prints the time it took to execute the multiplyVec function. The %f format specifier formats the elapsed time, measured in seconds, as a floating-point number.

main()

This code calls the main function to execute the code. Collectively, the code looks something like this:

import numpy as np
from timeit import default_timer as timer

def multiplyVec(a, b, c):
    # Multiply element by element in a plain Python loop
    for i in range(a.size):
        c[i] = a[i] * b[i]

def main():
    n = 64000000  # size per declared array

    a = np.ones(n, dtype=np.float32)
    b = np.ones(n, dtype=np.float32)
    c = np.ones(n, dtype=np.float32)

    start = timer()
    multiplyVec(a, b, c)
    vecMulTime = timer() - start

    print("c[:6] = " + str(c[:6]))
    print("c[-6:] = " + str(c[-6:]))

    print("This vector multiplication took %f seconds" % vecMulTime)

main()

Running it produces the following output:

Now it is time to rewrite the code using numba's vectorize decorator and do the multiplication on the GPU.

But before we start writing the main code, we gotta make sure that we properly execute the initial steps, which are as follows:

Install numba using the following command:

!pip install numba

And then, an environment sanity check:

!nvidia-smi

This prints a table listing the GPUs visible to the session, along with the driver and CUDA versions.
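The same sanity check can be made from Python. The sketch below assumes numba is the library installed above and relies on its cuda.is_available() function; the wrapper name gpu_is_usable is hypothetical. It returns False instead of failing when numba or a CUDA-capable GPU is missing.

```python
def gpu_is_usable() -> bool:
    """Report whether numba can see a CUDA-capable GPU."""
    try:
        from numba import cuda
    except ImportError:
        # numba is not installed in this environment.
        return False
    return bool(cuda.is_available())

print("CUDA GPU available:", gpu_is_usable())
```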

Now, let us write the code with a step-by-step explanation:

import numpy as np

from timeit import default_timer as timer

from numba import vectorize

The first three lines of code import the necessary libraries:

numpy: This library is used for working with numerical arrays.

timeit: This library is used for measuring the time it takes to execute a code block.

numba: This library is a just-in-time compiler for numerical Python code; here it is used to compile a function for execution on the GPU.

@vectorize(["float32(float32,float32)"], target ="cuda")

This line of code decorates the multiplyVec function with the @vectorize decorator from the numba library. The decorator tells numba to compile the multiplyVec function for execution on the CUDA platform, which is the programming model for NVIDIA GPUs. The ["float32(float32,float32)"] part of the decorator specifies that the multiplyVec function takes two arguments of type float32 and returns a value of type float32.

def multiplyVec(a, b):
    return a * b

This code defines a function called multiplyVec that multiplies two values a and b. It is written as if for a single pair of float32 numbers; when it is called with two arrays, the @vectorize decorator applies it element by element and returns the result as a new array.
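With @vectorize, the decorated function is written for single values and then applied element by element across arrays. That idea can be tried without a GPU. The sketch below uses NumPy's np.vectorize purely as an analogy: it runs on the CPU in a Python loop and is not how numba compiles code, and the names multiply_scalar and multiply_elementwise are illustrative.

```python
import numpy as np

def multiply_scalar(x, y):
    # Written for a single pair of numbers, not for arrays.
    return x * y

# np.vectorize wraps the scalar function so it broadcasts over arrays.
multiply_elementwise = np.vectorize(multiply_scalar, otypes=[np.float32])

a = np.array([1.0, 2.0, 3.0], dtype=np.float32)
b = np.array([4.0, 5.0, 6.0], dtype=np.float32)

result = multiply_elementwise(a, b)
print(result, result.dtype)
```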

def main():
    n = 64000000  # size per declared array

This code defines a function called main that sets the size of the arrays to 64 million elements.

    a = np.ones(n, dtype=np.float32)
    b = np.ones(n, dtype=np.float32)
    c = np.ones(n, dtype=np.float32)

This code creates three arrays a, b, and c of size n and fills them with ones.

    start = timer()
    c = multiplyVec(a, b)
    vecMulTime = timer() - start

This code measures the time it takes to execute the multiplyVec function using numba's GPU-accelerated version. The timer() function is used to get the current time before and after calling the function, and the difference between the two times is stored in the variable vecMulTime. Because a and b are ordinary NumPy arrays in host memory, the measured time also includes copying them to the GPU and copying the result back.

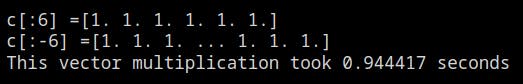

    print("c[:6] = " + str(c[:6]))
    print("c[-6:] = " + str(c[-6:]))

This code prints the first six and last six elements of the array c to verify that the element-wise multiplication was performed correctly.

    print("This vector multiplication took %f seconds" % vecMulTime)

This code prints the time it took to execute the multiplyVec function using numba's GPU-accelerated version. The %f format specifier is used to print the time in seconds.

main()

And this code calls the main function to execute the code. Collectively, the code looks similar to this:

import numpy as np
from timeit import default_timer as timer
from numba import vectorize

# Compile multiplyVec as an element-wise function that runs on the GPU
@vectorize(["float32(float32,float32)"], target="cuda")
def multiplyVec(a, b):
    return a * b

def main():
    n = 64000000  # size per declared array

    a = np.ones(n, dtype=np.float32)
    b = np.ones(n, dtype=np.float32)
    c = np.ones(n, dtype=np.float32)  # placeholder; replaced by the result below

    start = timer()
    c = multiplyVec(a, b)
    vecMulTime = timer() - start

    print("c[:6] = " + str(c[:6]))
    print("c[-6:] = " + str(c[-6:]))

    print("This vector multiplication took %f seconds" % vecMulTime)

main()

Running it produces the following output:

I have prepared the template for this whole process as a Google Colab notebook, which you can access through the following link:

https://github.com/epsit03/working-with-nvidia-cuda

A special mention & thanks to Ahmad Bazzi as he has been my constant inspiration in getting deeper insights into the world of algorithms, CUDA programming concepts and so much more... This blog has been inspired by one of his videos on the same.

And with this, our ship has completed another voyage in the endless oceans of ML. So hey, fellow sailors, be ready for the upcoming journey, and let's see where our sail takes us next...